Welcome to Hetu!

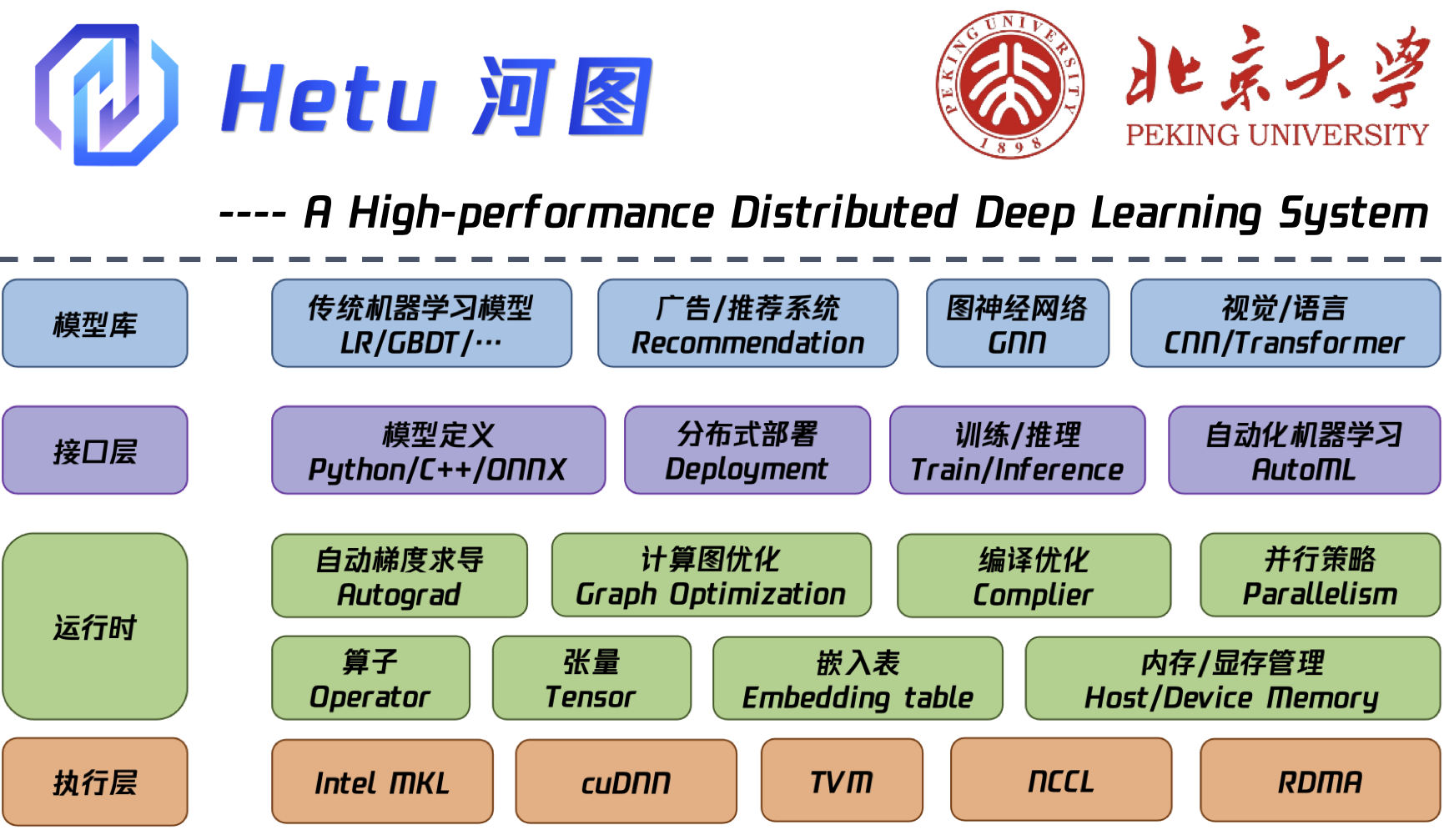

Hetu is a high-performance distributed deep learning system developed by the team of Professor Bin Cui at Peking University, whose past work on distributed ML systems includes Angel. The system is completely decoupled from existing DL systems. It takes into account both high availability in industry and innovation in academia, and has a number of advanced characteristics:

Applicability. DL model definition with a standard dataflow graph; many basic CPU and GPU operators; efficient implementations of plenty of DL models and at least 10 popular ML algorithms.

Efficiency. Achieves at least a 30% speedup over TensorFlow on DNN, CNN, and RNN benchmarks.

Flexibility. Supports various parallel training protocols and distributed communication architectures, such as data, model, and pipeline parallelism, with parameter server and AllReduce communication.

Scalability. Deployment on more than 100 computation nodes; training giant models with trillions of model parameters, e.g., on Criteo Kaggle and Open Graph Benchmark.

Agility. Automated ML pipeline: feature engineering, model selection, and hyperparameter search.
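
As a rough illustration of the two communication architectures named under Flexibility, the sketch below simulates one data-parallel training step in plain Python. Each worker holds a local gradient, and the averaged gradient is applied either through a central parameter server or through an AllReduce-style exchange among workers. This is not Hetu's API: the function names (`parameter_server_step`, `allreduce_step`), the in-process "workers", and the gradient values are all hypothetical, and the AllReduce collective is only simulated. The point is that both schemes yield the same averaged update.

```python
from typing import List

Vector = List[float]


def average(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(vals) / n for vals in zip(*vectors)]


def parameter_server_step(params: Vector, worker_grads: List[Vector], lr: float) -> Vector:
    """Hypothetical parameter-server update: workers push gradients to a
    central server, which averages them and updates the single shared copy."""
    avg = average(worker_grads)
    return [p - lr * g for p, g in zip(params, avg)]


def allreduce_step(replicas: List[Vector], worker_grads: List[Vector], lr: float) -> List[Vector]:
    """Hypothetical AllReduce update: every worker ends up with the same
    averaged gradient and applies it to its own replica, with no central
    server. The collective is simulated here by computing the average once."""
    avg = average(worker_grads)
    return [[p - lr * g for p, g in zip(replica, avg)] for replica in replicas]


params = [1.0, 2.0]
grads = [[0.25, 0.5], [0.75, 1.0]]  # one local gradient per worker (made-up values)

ps_params = parameter_server_step(params, grads, lr=0.5)
ar_replicas = allreduce_step([list(params), list(params)], grads, lr=0.5)
```

In this toy setup every AllReduce replica ends the step with the same parameters as the parameter-server copy; the two architectures differ in where the aggregation happens and how traffic is routed, not in the resulting update.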

This project is supported by the National Key Research and Development Program of China, and some related results have been published at top-tier CCF-A conferences. The project has recently been open sourced at: https://github.com/PKU-DAIR/Hetu.

We welcome everyone interested in machine learning or distributed systems to contribute code and to create issues or pull requests. Please refer to the Contribution Guide and Development plan for more details.

News

2021.08.06 [NEW] We announced our cooperation with Tencent on Angel 4.0 and Hetu: link.

2021.07.31 [NEW] We held a symposium at ACM TURC 2021 to introduce Hetu: link.

2021.07.28 [NEW] We launched a contest with openGCC for Hetu development: link.

2021.07.18 [NEW] We released Hetu on GitHub: https://github.com/PKU-DAIR/Hetu.

Overview

Features

Tutorials

Development plan